WordPress is still cool, but you know what’s even cooler? Hosting WordPress in the cloud! For this series, I’m focusing on AWS. I’ll use Infrastructure as Code (IaC) and automation as much as possible!

In this post, I’ll cover how to deploy WordPress websites on AWS in the most cost-efficient and simplest way with a minimal setup for small-scale websites. This setup can handle hosting multiple small WordPress websites simultaneously on a single instance. Later in this series, I’ll write more about how to create more scalable and enterprise deployments for more complicated setups.

You can find the code for this post in this GitHub repository.

Who is This Post for?

Although I assume you have some initial knowledge about AWS, I’ll try to explain all the used resources briefly and provide links to the related AWS documentation for further information. Also, if you’re new to Terraform, don’t worry! You can use this post as an entry point. Just follow the process with me and check the provided links to Terraform documentation if you need to dive deeper into the concepts.

I assume you’re using a Unix-like OS such as Linux or macOS, but you should be able to run most of the commands on a Windows machine without changing anything. If they don’t work, just Google or ask an AI for their equivalent.

Used Resources, Technologies and Stacks

We’ll use these resources from AWS for this deployment:

- Route 53: The DNS service that answers queries for our domains.

- VPC: The private cloud network that all the resources live in.

- EC2: To host the web server and the WordPress setup.

- S3 (optional): To act as the Terraform remote backend and store the state.
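To make the optional S3 item concrete, here is a minimal sketch of a Terraform remote backend configuration. The bucket name, state key, and region are placeholders I made up for illustration and are not taken from the repository for this post. The bucket has to exist before you run `terraform init`.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }

  # Store the state file in S3 instead of on the local disk.
  backend "s3" {
    bucket  = "my-terraform-state-bucket"   # hypothetical bucket name
    key     = "wordpress/terraform.tfstate" # hypothetical path of the state object
    region  = "us-east-1"                   # assumed region, use your own
    encrypt = true                          # encrypt the state object at rest
  }
}
```

If you skip this block, Terraform keeps the state in a local `terraform.tfstate` file, which is fine for trying things out on a single machine.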

We also use these tools and stacks in this project:

- Terraform: To automate the deployments and cloud resource management.

- AWS CLI: To configure access to AWS resources.

- OpenLiteSpeed AMI: To be used as the machine image on our EC2 instance with pre-installed OpenLiteSpeed, LSPHP, MariaDB (MySQL), phpMyAdmin, LiteSpeed Cache, and WordPress! You can either use the ready-to-use AMI from AWS Marketplace with a small monthly payment, or build your own custom, flexible, and free AMI that I explained earlier in this post: The Ultimate AWS AMI for WordPress Servers: Automating OpenLiteSpeed & MariaDB Deployment with Packer and Ansible. An important bonus for using this approach is having a proper backup solution in place, which is critical for this setup considering that we’re storing everything in a relatively fragile EC2 instance.

General Architecture

First of all, keep in mind that this post covers one of the simplest yet still secure ways to host WordPress on AWS. Other solutions might be more scalable, robust, and secure. I’ll gradually post tutorials for more scalable enterprise setups in the future. I’ll also mention possible improvements related to each section or resource in this post.

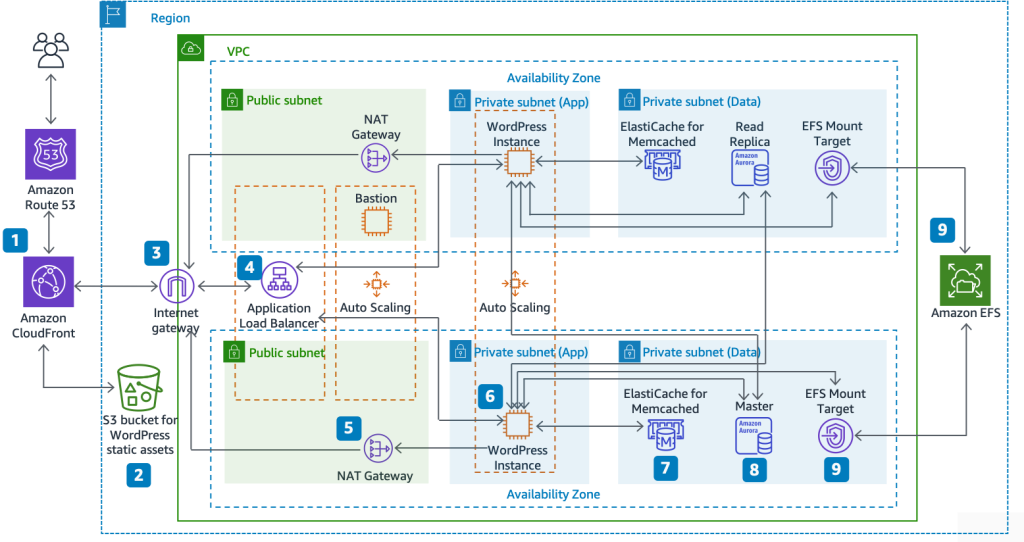

AWS has released a whitepaper about hosting WordPress and it has a reference architecture which looks like this:

As you can see, it’s quite complex and definitely overkill for a small business or personal website. Later in this series, I’ll cover this architecture and automate it using Terraform, but for now we want to start small with the most basic components to host a working, fast, and secure WordPress website on AWS.

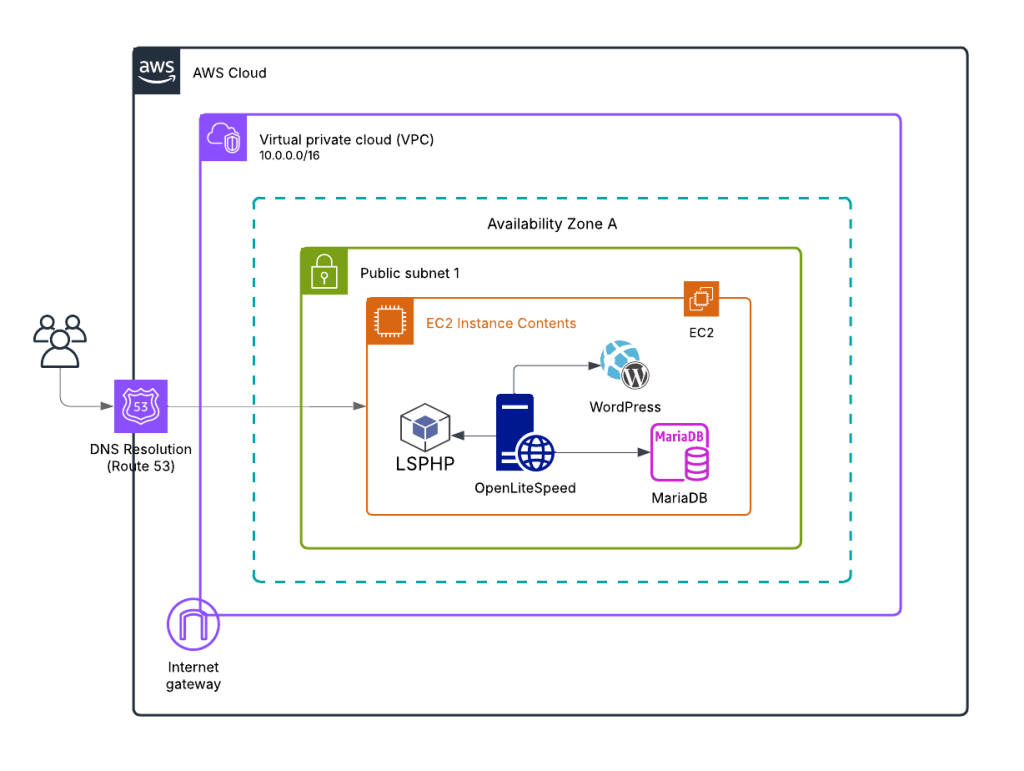

Here’s the overview of the architecture that we’re going to follow in this tutorial:

As you can see, it’s a lot simpler than the reference architecture. I made these simplifications compared to the AWS reference architecture:

- Removed CloudFront (CDN layer)

- Skipped storing static files on S3

- Removed Application Load Balancer

- Removed NAT Gateway

- Used a single public subnet to provide connectivity to all the components and removed the private subnets

- Used a single replica setup instead of the Auto Scaling group of Amazon EC2 instances

- Used a DB instance (MariaDB) inside the web server (EC2) instance instead of using separate instances for DB or using AWS RDS service

- Hosted WordPress files inside the EC2 instance instead of EFS

- Removed ElastiCache for Memcached
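To give a feel for how small the simplified architecture is, here is a rough Terraform sketch of it: one VPC, one public subnet, an internet gateway, and a single EC2 instance. This is not the code from the repository. The resource names, CIDR ranges, security group rules, and the `ami_id` variable are assumptions for illustration only.

```hcl
# Hypothetical variable: ID of the OpenLiteSpeed WordPress AMI
# (from AWS Marketplace or built yourself).
variable "ami_id" {
  type = string
}

resource "aws_vpc" "main" {
  cidr_block           = "10.0.0.0/16" # assumed range
  enable_dns_hostnames = true
}

# A single public subnet: no private subnets and no NAT Gateway.
resource "aws_subnet" "public" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = "10.0.1.0/24" # assumed range
  map_public_ip_on_launch = true
}

resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.main.id
}

# Send all outbound traffic from the subnet through the internet gateway.
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.igw.id
  }
}

resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}

# Allow HTTP and HTTPS in, everything out (illustrative rules only).
resource "aws_security_group" "web" {
  name   = "wordpress-web"
  vpc_id = aws_vpc.main.id

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}

# One instance hosts the web server, the database, and the WordPress files.
resource "aws_instance" "wordpress" {
  ami                    = var.ami_id
  instance_type          = "t3.small"
  subnet_id              = aws_subnet.public.id
  vpc_security_group_ids = [aws_security_group.web.id]

  tags = {
    Name = "wordpress"
  }
}
```

Everything the reference architecture spreads across CloudFront, a load balancer, RDS, EFS, and ElastiCache lives on that one instance here, which is why backups matter so much for this setup.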

Monthly Costs

Let’s talk numbers before we dive into the implementation. One of the main advantages of this minimal setup is cost-effectiveness. Here’s a rough breakdown of what you can expect to pay monthly:

- EC2 instance: Around $18 for a t3.small or similar instance running 24/7

- Route 53: Approximately $0.50 for DNS management

- VPC, networking and other EC2-related resources: About $5 per month

This adds up to roughly $24 per month for the entire setup ($18 + $0.50 + $5 = $23.50). Keep in mind that these aren’t exact numbers and might differ from region to region. Also, these figures don’t include applicable taxes, which will vary based on your location and billing address.

The best part? This setup isn’t limited to a single WordPress website! You can actually host multiple small websites using this configuration, as long as they don’t have a lot of visitors or overlapping peak times. OpenLiteSpeed is efficient enough to handle several low-traffic sites on a single instance, making this an extremely cost-effective solution for freelancers, small agencies, or hobbyists managing multiple projects.

If your sites start gaining more traffic or if performance becomes an issue, that’s when you might need to consider scaling up to the more robust architectures I’ll cover in future tutorials. But for getting started or for sites with modest traffic, this roughly $24/month solution is hard to beat!

Why OpenLiteSpeed?

OpenLiteSpeed is an open-source version of LiteSpeed Web Server, and it’s becoming increasingly popular for WordPress hosting. But why am I choosing it for this setup? Let me break it down:

First, it’s blazingly fast! OpenLiteSpeed consistently outperforms other web servers like Apache and Nginx in benchmarks, especially for WordPress sites. This performance boost comes from its event-driven architecture and optimized processing of dynamic content.

Second, it has a built-in server-level cache that WordPress controls through the LSCache plugin. This is a game-changer for WordPress performance, because cached pages are served by the web server itself, which is much more efficient than caching plugins that run entirely in PHP. And the best part? It’s completely free, unlike the commercial LiteSpeed version which requires licensing fees.

Third, it’s secure and stable. OpenLiteSpeed comes with built-in security features and is regularly updated to address vulnerabilities. Its resource efficiency means your small EC2 instance won’t be overwhelmed even during traffic spikes.

Fourth, it’s surprisingly easy to set up and manage, especially when using a pre-configured AMI as I explained in this tutorial. The web-based admin interface makes configuration a breeze compared to editing text files in Apache or Nginx.

Finally, it offers excellent PHP handling through LSPHP (LiteSpeed PHP), which is optimized for performance and memory usage. This means your WordPress site will run more efficiently on smaller (and cheaper!) EC2 instances.

While Apache might be more widely used and Nginx is popular for its reverse proxy capabilities, OpenLiteSpeed gives us the perfect balance of performance, ease-of-use, and cost-effectiveness for our minimal WordPress setup. It’s like having enterprise-level performance without the enterprise-level complexity or price tag!

Why Terraform?

Because I love it! It’s simple and developer-friendly, yet powerful, modular, flexible, and extendable. I’ve spent hundreds of hours configuring and deploying cloud resources on AWS using CDK and CloudFormation. But in my personal opinion, Terraform shines on the muddy ground of IaC!

Terraform’s declarative approach means you describe the desired state of your infrastructure, and it figures out how to make it happen. This is much more intuitive than writing procedural code or wrangling with YAML files that feel like they’re from another dimension.

Another huge advantage is that Terraform isn’t provider-specific. Once you learn the Terraform syntax and workflow, you can apply those skills to provision resources on AWS, Google Cloud, Azure, DigitalOcean, or dozens of other providers. It’s like learning one language that lets you speak to all the clouds!

The Terraform ecosystem is also incredibly rich with modules that you can reuse. Need a VPC with all the trimmings? There’s probably a module for that. Want to deploy a complex application? Someone’s likely already shared a module that gets you 80% of the way there.

For our WordPress setup, Terraform means we can spin up the entire infrastructure with a few commands, tear it down when we don’t need it (saving money!), and easily replicate it for different environments or clients. We can also easily add extra WordPress websites to our setup by simply updating our Terraform code – no need to manually configure new domains or virtual hosts. It’s the difference between building with Lego (structured, reusable pieces) versus sculpting with clay (custom but harder to modify).
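As a rough illustration of the "add a site by updating the code" idea, here is a sketch that creates a Route 53 hosted zone and an apex A record for every domain in a list. The `domains` and `server_ip` variables and the example domains are hypothetical, and this covers only the DNS side. Setting up the matching virtual host on the server is a separate step that this sketch does not handle.

```hcl
# Hypothetical list of sites hosted on the single instance.
variable "domains" {
  type    = set(string)
  default = ["example.com", "example.org"]
}

# Hypothetical variable: public IP address of the WordPress instance.
variable "server_ip" {
  type = string
}

# One hosted zone per domain.
resource "aws_route53_zone" "site" {
  for_each = var.domains
  name     = each.value
}

# One apex A record per zone, all pointing at the same server.
resource "aws_route53_record" "apex" {
  for_each = aws_route53_zone.site

  zone_id = each.value.zone_id
  name    = each.value.name
  type    = "A"
  ttl     = 300
  records = [var.server_ip]
}
```

With this shape, adding a third site means adding one string to `domains` and running `terraform apply` again. Terraform works out that only the new zone and record need to be created.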

Okay, let’s get our hands dirty and host a WordPress instance on AWS!
