WordPress is still cool, but you know what’s even cooler? Hosting WordPress in the cloud! For this series, I’m focusing on AWS. I’ll use Infrastructure as Code (IaC) and automation as much as possible!

In this post, I’ll cover how to deploy WordPress websites on AWS in the most cost-efficient and simplest way with a minimal setup for small-scale websites. This setup can handle hosting multiple small WordPress websites simultaneously on a single instance. Later in this series, I’ll write more about how to create more scalable and enterprise deployments for more complicated setups.

You can find the code for this post on this GitHub repository.

Who is This Post for?

Although I assume you have some initial knowledge about AWS, I’ll try to explain all the used resources briefly and provide links to the related AWS documentation for further information. Also, if you’re new to Terraform, don’t worry! You can use this post as an entry point. Just follow the process with me and check the provided links to Terraform documentation if you need to dive deeper into the concepts.

I assume you’re using a Unix-based OS like Linux or macOS, but you should be able to run most of the commands on a Windows machine without needing to change anything. If they don’t work, just Google or ask an AI for their equivalent.

Used Resources, Technologies and Stacks

We’ll use these resources from AWS for this deployment:

- Route 53: The domain name system to address DNS requests.

- VPC: The private cloud network being used by all the resources.

- EC2: To host the web server and the WordPress setup.

- S3 (optional): To act as Terraform remote backend and store the state.

We also use these tools and stacks in this project:

- Terraform: To automate the deployments and cloud resource management.

- AWS CLI: To configure access to AWS resources.

- OpenLiteSpeed AMI: To be used as the machine image on our EC2 instance with pre-installed OpenLiteSpeed, LSPHP, MariaDB (MySQL), phpMyAdmin, LiteSpeed Cache, and WordPress! You can either use the ready-to-use AMI from AWS Marketplace with a small monthly payment, or build your own custom, flexible, and free AMI that I explained earlier in this post: The Ultimate AWS AMI for WordPress Servers: Automating OpenLiteSpeed & MariaDB Deployment with Packer and Ansible. An important bonus for using this approach is having a proper backup solution in place, which is critical for this setup considering that we’re storing everything in a relatively fragile EC2 instance.

General Architecture

First of all, a reminder: this post is about one of the simplest yet still secure ways to host WordPress on AWS. Other solutions may be more scalable, robust, and secure, and I’ll gradually post tutorials for those enterprise setups in the future. I’ll also mention possible improvements related to each section or resource in this post.

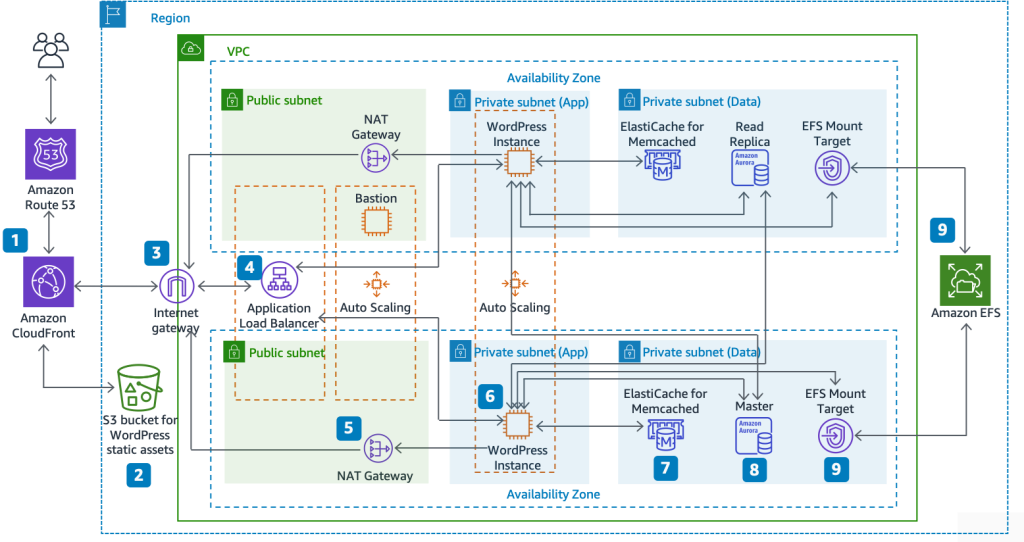

AWS has released a whitepaper about hosting WordPress and it has a reference architecture which looks like this:

As you can see, it’s quite complex and definitely overkill for a small business or personal website. Later in this series, I’ll definitely cover this architecture and automate it using Terraform, but for now we want to start small with the most basic components to host a working, fast, and secure WordPress website on AWS.

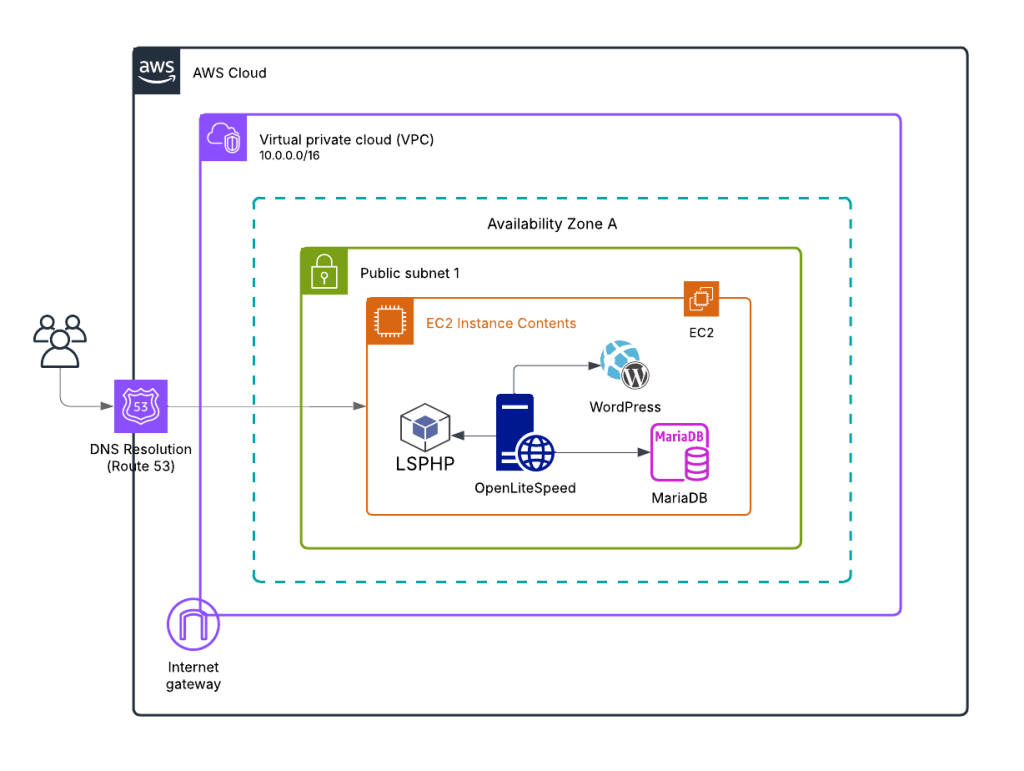

Here’s the overview of the architecture that we’re going to follow in this tutorial:

As you can see, it’s a lot simpler than the reference architecture. I made these simplifications compared to the AWS reference architecture:

- Removed CloudFront (CDN layer)

- Skipped storing static files on S3

- Removed Application Load Balancer

- Removed NAT Gateway

- Used a single public subnet to provide connectivity to all the components and removed the private subnets

- Used a single replica setup instead of the Auto Scaling group of Amazon EC2 instances

- Used a DB instance (MariaDB) inside the web server (EC2) instance instead of using separate instances for DB or using AWS RDS service

- Hosted WordPress files inside the EC2 instance instead of EFS

- Removed ElastiCache for Memcached

Monthly Costs

Let’s talk numbers before we dive into the implementation. One of the main advantages of this minimal setup is cost-effectiveness. Here’s a rough breakdown of what you can expect to pay monthly:

- EC2 instance: Around $18 for a t3.small or similar instance running 24/7

- Route 53: Approximately $0.50 for DNS management

- VPC, networking and other EC2-related resources: About $5 per month

This adds up to roughly $24 per month for the entire setup. Keep in mind that these aren’t exact numbers and might differ from region to region. Also, these figures don’t include applicable taxes, which will vary based on your location and billing address.
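To make the arithmetic behind that estimate explicit, here is a small sketch you could drop into a scratch .tf file and run with terraform apply. The local names are made up for illustration, and the figures are only the rough estimates from the list above, not live AWS prices.

```hcl
# Rough monthly estimates copied from the list above (USD, before taxes).
locals {
  monthly_costs = {
    ec2     = 18.00 # t3.small or similar, running 24/7
    route53 = 0.50  # DNS management
    network = 5.00  # VPC, networking and other EC2-related resources
  }

  # 18.00 + 0.50 + 5.00
  monthly_total = sum(values(local.monthly_costs))
}

output "estimated_monthly_total_usd" {
  value = local.monthly_total
}
```

The sum comes to 23.5, which is where the rounded figure of about $24 comes from.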

The best part? This setup isn’t limited to a single WordPress website! You can actually host multiple small websites using this configuration, as long as they don’t have a lot of visitors or overlapping peak times. OpenLiteSpeed is efficient enough to handle several low-traffic sites on a single instance, making this an extremely cost-effective solution for freelancers, small agencies, or hobbyists managing multiple projects.

If your sites start gaining more traffic or if performance becomes an issue, that’s when you might need to consider scaling up to the more robust architectures I’ll cover in future tutorials. But for getting started or for sites with modest traffic, this roughly $24/month solution is hard to beat!

Why OpenLiteSpeed?

OpenLiteSpeed is an open-source version of LiteSpeed Web Server, and it’s becoming increasingly popular for WordPress hosting. But why am I choosing it for this setup? Let me break it down:

First, it’s blazingly fast! OpenLiteSpeed consistently outperforms other web servers like Apache and Nginx in benchmarks, especially for WordPress sites. This performance boost comes from its event-driven architecture and optimized processing of dynamic content.

Second, it has native caching capabilities through the LSCache plugin for WordPress. This is a game-changer for WordPress performance, offering server-level caching that’s much more efficient than plugin-based solutions. And the best part? It’s completely free, unlike the commercial LiteSpeed version which requires licensing fees.

Third, it’s secure and stable. OpenLiteSpeed comes with built-in security features and is regularly updated to address vulnerabilities. Its resource efficiency means your small EC2 instance won’t be overwhelmed even during traffic spikes.

Fourth, it’s surprisingly easy to set up and manage, especially when using a pre-configured AMI as I explained in this tutorial. The web-based admin interface makes configuration a breeze compared to editing text files in Apache or Nginx.

Finally, it offers excellent PHP handling through LSPHP (LiteSpeed PHP), which is optimized for performance and memory usage. This means your WordPress site will run more efficiently on smaller (and cheaper!) EC2 instances.

While Apache might be more widely used and Nginx is popular for its reverse proxy capabilities, OpenLiteSpeed gives us the perfect balance of performance, ease-of-use, and cost-effectiveness for our minimal WordPress setup. It’s like having enterprise-level performance without the enterprise-level complexity or price tag!

Why Terraform?

Because I love it! It’s so simple and developer-friendly but powerful, modular, flexible, and extendable. I’ve spent hundreds of hours configuring and deploying cloud resources on AWS using CDK and CloudFormation. But in my personal opinion, Terraform shines in the IaC muddy ground!

Terraform’s declarative approach means you describe the desired state of your infrastructure, and it figures out how to make it happen. This is much more intuitive than writing procedural code or wrangling with YAML files that feel like they’re from another dimension.

Another huge advantage is that Terraform isn’t provider-specific. Once you learn the Terraform syntax and workflow, you can apply those skills to provision resources on AWS, Google Cloud, Azure, DigitalOcean, or dozens of other providers. It’s like learning one language that lets you speak to all the clouds!

The Terraform ecosystem is also incredibly rich with modules that you can reuse. Need a VPC with all the trimmings? There’s probably a module for that. Want to deploy a complex application? Someone’s likely already shared a module that gets you 80% of the way there.

For our WordPress setup, Terraform means we can spin up the entire infrastructure with a few commands, tear it down when we don’t need it (saving money!), and easily replicate it for different environments or clients. We can also easily add extra WordPress websites to our setup by simply updating our Terraform code – no need to manually configure new domains or virtual hosts. It’s the difference between building with Lego (structured, reusable pieces) versus sculpting with clay (custom but harder to modify).

Okay, let’s get our hands dirty and host a WordPress instance on AWS!

Prerequisites

We need to have these tools installed and configured to be able to proceed with the deployment.

AWS CLI

There are several ways to give Terraform the access it needs to deploy the resources. One simple solution is to install and configure the AWS CLI, which creates profiles on your system that Terraform can later use.

Install the AWS CLI by following the official AWS documentation, then configure it with access to the AWS account where you’re going to deploy the resources:

aws configure

For the sake of simplicity, I suggest using a credential with administrator access. Of course, in a production environment, this deployment should be done in a CI/CD pipeline with least privilege IAM policies and roles.

If you have multiple AWS profiles configured, before running Terraform commands and deploying the resources, ensure the correct AWS profile is set by exporting the AWS_PROFILE environment variable:

export AWS_PROFILE=<AWS_PROFILE>

Terraform

Follow the official Terraform documentation to install Terraform on your system. After a successful installation, you should be able to run the below command to see Terraform’s version:

terraform -version

First, create a new directory for your project like aws-wp so you can add all the later files and configurations to it.

Terraform Backend

In Terraform, we have a concept named backend which is the place Terraform keeps its state. You can keep the Terraform state locally or use a remote backend for it. For this project, I decided to keep the state remotely because it has some advantages including but not limited to:

- State Persistence & Collaboration: Remote backends store state centrally, preventing loss or corruption. Multiple team members can work on infrastructure without needing to manually share state files.

- State Locking & Consistency: Prevents simultaneous modifications to infrastructure by locking the state, reduces conflicts, and ensures only one user applies changes at a time.

One simple remote backend can be an S3 bucket to store the state and the state locking mechanism information. You can simply create these resources manually using AWS console or CLI.

However, I personally prefer to have a sort of init_infra project to also create these resources using Terraform! I use this pattern since later when I need to create other prerequisite resources like pipeline infrastructure and roles, I can add them to this init_infra. And I keep the state of this init project locally on my own machine.
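For readers who want to try that pattern, a minimal sketch of such an init_infra project might look like the following. This is not the code from my repository; the file layout and resource names are assumptions made for illustration, while the bucket name and region match the ones used in the CLI commands in this post.

```hcl
# init_infra/main.tf (hypothetical layout; this project keeps its state locally)
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.88"
    }
  }
  required_version = ">= 1.10"
}

provider "aws" {
  region = "eu-central-1"
}

# Bucket that holds the remote state of the other projects.
resource "aws_s3_bucket" "terraform_state" {
  bucket = "bugfloyd-websites-tf" # must be globally unique

  lifecycle {
    prevent_destroy = true # guard against accidental deletion
  }
}

# Keep old versions of the state so it can be recovered.
resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# State files can contain secrets, so block any public access.
resource "aws_s3_bucket_public_access_block" "terraform_state" {
  bucket                  = aws_s3_bucket.terraform_state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```

Running terraform init and terraform apply inside that directory would create the same bucket that the CLI commands create, with the added benefit that later prerequisites can live next to it.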

Here for simplicity, we will use AWS CLI to create these resources.

Create an S3 bucket for Terraform state:

aws s3 mb s3://bugfloyd-websites-tf --region eu-central-1

Use a unique name for the bucket. For this post, I am using bugfloyd-websites-tf. Also, use a region of your choice. I am using eu-central-1.

We also need to enable bucket versioning for this bucket to be able to recover Terraform state and to guard it against corruption and accidental deletion:

aws s3api put-bucket-versioning \
  --bucket bugfloyd-websites-tf \
  --versioning-configuration Status=Enabled \
  --region eu-central-1

Note that starting from Terraform v1.10.0, state locking no longer requires a DynamoDB table. We will just add a new argument (use_lockfile) to the Terraform backend configuration.

IDE Terraform Extension

I recommend installing the Terraform extension for your IDE to get syntax highlighting, validation, and auto-completion, which can save a lot of time.

For VSCode, use the official extension from HashiCorp. For JetBrains IDEs, install the official Terraform and HCL plugin.

AWS Tags

You’ll notice that I add tags to almost all the resources I create. This helps me to later enable cost allocation for these tags and get clearer insight about the usage for each of these resources and resource categories. Feel free to define your own tags and update the names for the ones I used.

The AWS Cost Explorer and AWS Cost and Usage Report services can use these tags to break down your billing, making it much easier to understand which projects, clients, or environments are consuming your resources. It’s like having itemized receipts instead of one big bill!

Hosted Zones

I create Route 53 public hosted zones separate from the main infrastructure since we need to point the domain to the Name Servers (NS) of this hosted zone before proceeding with the main infra. This process may take a couple of hours to propagate, so it makes sense to decouple it from the main deployment.

You don’t know what a hosted zone is? Check this AWS doc.

Infrastructure as Code

Create a sub-directory aws-wp/hostedzones

First, we need to configure Terraform itself including the S3 key being used for its remote backend, the required minimum core version, and also the required providers to deploy the resources (AWS provider).

terraform {

backend "s3" {

key = "hosted-zones-state/terraform.tfstate"

encrypt = true

}

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.88"

}

}

required_version = ">= 1.10"

}

Then we define a Terraform variable for the domain names, the provider to be used (AWS), and also Route 53 hosted zones. This file also includes the Terraform outputs to easily access the ID and NS of the created hosted zones after the deployment.

provider "aws" {

region = "eu-west-1"

default_tags {

tags = {

Owner = "Bugfloyd"

Service = "Bugfloyd/Websites"

}

}

}

variable "websites" {

description = "List of domains for which hosted zones should be created"

type = list(string)

}

resource "aws_route53_zone" "this" {

for_each = toset(var.websites)

name = each.value

tags = {

Name = "${join("", [for part in split(".", each.value) : title(part)])}HostedZone"

CostCenter = "Bugfloyd/Network"

Website = each.value

}

}

output "hosted_zone_ids" {

description = "The IDs for the hosted zones"

value = { for domain, zone in aws_route53_zone.this : domain => zone.id }

}

output "hosted_zone_name_servers" {

description = "The name servers for the hosted zones"

value = { for domain, zone in aws_route53_zone.this : domain => zone.name_servers }

}

Note that I’m using for_each to loop through the var.websites variable, which is a list of domains (strings), and passing each value to the name argument. We do the same for tags to have unique tags for each resource.
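If the expression behind the Name tag looks cryptic, the sketch below isolates it so you can experiment in a scratch directory. The example_domains local and the second domain are made up for illustration; only the expression itself comes from the code above.

```hcl
# Scratch file for experimenting; "example_domains" is a made-up local.
locals {
  example_domains = ["bugfloyd.com", "example.org"]

  # Split each domain on dots, capitalize every part, glue the parts back
  # together, and append the "HostedZone" suffix.
  zone_names = {
    for domain in local.example_domains :
    domain => "${join("", [for part in split(".", domain) : title(part)])}HostedZone"
  }
}

output "zone_names" {
  value = local.zone_names
}
```

For these two domains the map contains BugfloydComHostedZone and ExampleOrgHostedZone. Also worth knowing: for_each only accepts a map or a set of strings, which is why the list is wrapped in toset(); for a set, each.key and each.value are identical.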

Initialize Terraform

First, create a backend configuration file named backend_config.hcl to store the remote backend information:

region = "eu-central-1"

bucket = "bugfloyd-websites-tf"

Note: This file should not be committed to git! Add it to your .gitignore file.

Keep in mind that we have to use the same region and bucket name that we used in the above “Terraform Backend” section.

Now we can initialize the Terraform backend (state) by running this command in the hostedzones directory:

terraform init -backend-config backend_config.hcl

Deployment

You can pass the values for the variables in the command arguments, but I always find it easier to define a tfvar file named terraform.tfvars and put the values there to avoid long plan and apply commands.

websites = [

"bugfloyd.com"

]

You can pass multiple values to this list variable to create and manage hosted zones for multiple domains.

Note: This file also should not be committed to git! Add it to your .gitignore file.

For Terraform deployments, I always recommend planning the changes first and then applying them. This gives you a dry run that you can review before anything is applied to the cloud, which helps avoid mistakes and data loss. Also, save the plan to an output file so that you can later apply exactly the changes you reviewed.

To deploy the required hosted zones for our domains:

terraform plan -out zones.tfplan # Review the changeset

terraform apply zones.tfplan

After a successful deployment, you will see the output including the hosted zone ID and name servers on your terminal:

hosted_zone_ids = {

"bugfloyd.com" = "Z09609554Y3NG7CAHTMB"

}

hosted_zone_name_servers = {

"bugfloyd.com" = tolist([

"ns-108.awsdns-13.com",

"ns-1285.awsdns-32.org",

"ns-1879.awsdns-42.co.uk",

"ns-531.awsdns-02.net",

])

}

Domain Configuration

Now go to your domain registrar (like Namecheap, GoDaddy, Squarespace, etc.) and update the name servers of your domain to match the values from the hosted zones Terraform output. This will point your domain to the created hosted zone on AWS. Make sure to use all 4 name servers.

DNS propagation takes some time, up to 24-48 hours in the worst case. However, if the TTL (Time To Live) values on the domain’s existing DNS records are low, you may see the result within a few minutes.

Just a heads-up, if you see the new NS values on your setup using a tool like nslookup or dig, it doesn’t mean that AWS also sees them! So I strongly recommend using a tool like dnschecker.org or whatsmydns.net to verify that the changes have been propagated in all regions. Check for NS records for your domain.

Continue with deploying the next steps only when you see the 4 NS values in all the regions in those tools. This is crucial because if the DNS hasn’t fully propagated, you might run into issues later when setting up SSL certificates, which depend on proper DNS validation.

Main Infrastructure

Now we create the main infrastructure needed to host WordPress!

Create a sub-directory aws-wp/infra

Same as hosted zones infra, we need to first define the Terraform backend and its requirements.

terraform {

backend "s3" {

key = "bugfloyd-state/terraform.tfstate"

encrypt = true

use_lockfile = true

}

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.88"

}

}

required_version = ">= 1.10"

}

Then define some variables that we’re going to use in the infrastructure code. Check the description for each variable, and don’t worry if you don’t know why we need it! You’ll find out soon!

variable "region" {

default = "eu-west-1"

}

variable "ols_image_id" {

description = "The ID of the AMI to be used for EC2 instance"

type = string

}

variable "admin_ips" {

description = "CIDR blocks of the admin IPs to be whitelisted for SSH and OpenLiteSpeed console access (e.g. 203.0.113.10/32)"

type = list(string)

}

variable "admin_public_key" {

description = "Public key of the admin to provide SSH access"

type = string

}

variable "domains" {

description = "Map of domain names to their Route 53 hosted zone IDs"

type = map(string)

}

Now create a main configuration file with the AWS provider defined in it.

provider "aws" {

region = var.region

default_tags {

tags = {

Owner = "Bugfloyd"

Service = "Bugfloyd/Websites"

}

}

}

Note that the default_tags are going to be added to all the resources. But we will override the Service tag in some of the resources to have service-specific tags.
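To illustrate how such an override behaves, here is a small sketch. The bucket resource and its tag values are hypothetical and not part of this project; the point is only that a resource-level tag with the same key wins over the provider’s default_tags, while the other defaults are still inherited.

```hcl
# Hypothetical resource, only to illustrate how tags are merged.
resource "aws_s3_bucket" "tag_demo" {
  bucket = "bugfloyd-tag-demo" # made-up name

  tags = {
    Service    = "Bugfloyd/Backups" # overrides the default Service tag
    CostCenter = "Bugfloyd/Storage" # added on top of the defaults
  }
}

output "tag_demo_effective_tags" {
  # tags_all holds the merged result: default_tags plus resource-level tags.
  value = aws_s3_bucket.tag_demo.tags_all
}
```

With the provider block above, the merged tags would keep Owner = "Bugfloyd" from the defaults and carry the overridden Service value.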

Network Infrastructure

Before anything else, we need to create the required network resources to be used in our web server instance.

resource "aws_vpc" "bugfloyd" {

cidr_block = "20.0.0.0/16"

enable_dns_support = true

enable_dns_hostnames = true

tags = {

Name = "BugfloydVPC"

CostCenter = "Bugfloyd/Network"

}

}

resource "aws_subnet" "public_a" {

vpc_id = aws_vpc.bugfloyd.id

cidr_block = "20.0.1.0/24"

availability_zone = element(data.aws_availability_zones.available.names, 0)

map_public_ip_on_launch = true

tags = {

Name = "BugfloydPublicSubnetA"

CostCenter = "Bugfloyd/Network"

}

}

resource "aws_internet_gateway" "internet_gateway" {

vpc_id = aws_vpc.bugfloyd.id

tags = {

Name = "BugfloydInternetGateway"

CostCenter = "Bugfloyd/Network"

}

}

resource "aws_route_table" "public_route_table" {

vpc_id = aws_vpc.bugfloyd.id

tags = {

Name = "BugfloydPublicRouteTable"

CostCenter = "Bugfloyd/Network"

}

}

resource "aws_route" "public_route" {

route_table_id = aws_route_table.public_route_table.id

destination_cidr_block = "0.0.0.0/0"

gateway_id = aws_internet_gateway.internet_gateway.id

depends_on = [aws_internet_gateway.internet_gateway]

}

resource "aws_route_table_association" "public_subnet_a_association" {

subnet_id = aws_subnet.public_a.id

route_table_id = aws_route_table.public_route_table.id

}

data "aws_availability_zones" "available" {}

Keep in mind that you can always use the default Virtual Private Cloud (VPC) of your region/account, but for better isolation, it’s recommended to create a separate VPC for each cloud application/project. Just a heads-up, there is a limitation for the number of VPCs that you can create on your account, but this limit can be increased by requesting a quota increase.

For this setup, we only need to create a single public subnet since we’re going to place our web server in this subnet with a public IP address. If you want to strictly follow cloud and networking best practices for security and scalability, it’s recommended to connect the web server (application) to a private subnet behind a NAT Gateway. I will cover such a setup later in a separate post.

What is a subnet? Check AWS docs!

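Worth mentioning, I use 20.0.0.0/16 as the CIDR block for the VPC. If this block is already being used on your account by other networks, feel free to change it to something else. Note that 20.0.0.0/16 falls outside the private IPv4 ranges defined in RFC 1918 (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16), which AWS recommends for VPCs. A block like 10.0.0.0/16 is a safer choice, because the instance can’t reach real internet hosts whose addresses fall inside the VPC range. Whatever you pick, the CIDR block for the subnet (20.0.1.0/24 here) should be within the range of the VPC block.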
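If you’d rather not calculate subnet ranges by hand, Terraform’s built-in cidrsubnet function can derive them from the VPC block. The sketch below uses the block from this post; the local names are made up, and the code in this tutorial hard-codes the values instead.

```hcl
locals {
  vpc_cidr = "20.0.0.0/16"

  # cidrsubnet(prefix, newbits, netnum): /16 plus 8 new bits gives a /24,
  # and netnum picks which /24 inside the VPC block.
  public_a_cidr = cidrsubnet(local.vpc_cidr, 8, 1) # "20.0.1.0/24"
  public_b_cidr = cidrsubnet(local.vpc_cidr, 8, 2) # "20.0.2.0/24"
}
```

Because every subnet is derived from vpc_cidr, changing the VPC block in one place keeps all subnets inside its range.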

Our web server instance needs internet access to perform various tasks: updating server packages, WordPress plugins, or connecting to third-party services. To enable internet access in our VPC, we need to add an internet gateway. The defined internet gateway by itself is like a door, but we need to also create the path (route) to this door. We do this by creating a route table which acts like an address book to keep track of our routes. Then we add a route so that AWS can direct packets with the destination of 0.0.0.0/0 (the whole internet!) to pass through that door (internet gateway). And to connect the defined route table and the gateway to the subnet, a route table association is defined so that the instances in the subnet can communicate with the outside world.

Also be aware that in the ideal scenario (public and private subnets + NAT gateway), it’s recommended to have multiple public and multiple private subnets in different Availability Zones (AZ) to have maximum availability. But in this simple setup, we just add a single subnet to one of the AZs of the region. To select this zone (for example, eu-central-1a) without hard-coding the AZ name, I used a Terraform data source (aws_availability_zones) to look up all the availability zones of the region and select the first one.
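As a hint of what spreading across AZs could look like, here is a sketch of a second public subnet placed in the second availability zone. It’s not part of this minimal setup; the public_b names and the 20.0.2.0/24 block are assumptions, and the snippet relies on the VPC, route table, and data source defined above.

```hcl
# Hypothetical second public subnet, placed in the second AZ of the region.
resource "aws_subnet" "public_b" {
  vpc_id                  = aws_vpc.bugfloyd.id
  cidr_block              = "20.0.2.0/24"
  availability_zone       = element(data.aws_availability_zones.available.names, 1)
  map_public_ip_on_launch = true

  tags = {
    Name       = "BugfloydPublicSubnetB"
    CostCenter = "Bugfloyd/Network"
  }
}

# Reuse the existing public route table so the new subnet also reaches
# the internet gateway.
resource "aws_route_table_association" "public_subnet_b_association" {
  subnet_id      = aws_subnet.public_b.id
  route_table_id = aws_route_table.public_route_table.id
}
```

A second subnet alone doesn’t add availability for a single instance; it only becomes useful once something like a load balancer or an Auto Scaling group spans both zones.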

Web Server Instance

Finally! We can now define our core component – the web server hosting WordPress!

First, let’s create a network interface that we can attach to the web server instance to provide network connectivity. We’ll also restrict the OpenLiteSpeed web console and SSH access to admin IPs only, while allowing global access to HTTP and HTTPS ports.

resource "aws_network_interface" "webserver" {

subnet_id = aws_subnet.public_a.id

security_groups = [aws_security_group.ec2_web.id]

tags = {

Name = "WebserverInstanceNetworkInterface"

CostCenter = "Bugfloyd/Websites/Instance"

}

}

# Security Group for EC2 Instance

resource "aws_security_group" "ec2_web" {

name = "WebsitesInstanceSecurityGroupWeb"

description = "Security group for the WordPress EC2 instance (public HTTP/HTTPS, admin-only SSH and web console)"

vpc_id = aws_vpc.bugfloyd.id

ingress {

description = "Allow HTTP from anywhere"

from_port = 80

to_port = 80

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

description = "Allow HTTPS from anywhere"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

ingress {

description = "Allow TCP 7080 from admin"

from_port = 7080

to_port = 7080

protocol = "tcp"

cidr_blocks = var.admin_ips

}

ingress {

description = "Allow SSH from admin"

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = var.admin_ips

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

tags = {

Name = "WebsitesInstanceSecurityGroupWeb"

CostCenter = "Bugfloyd/Websites/Instance"

}

}

Here we’ve created a network interface connected to our public subnet and attached a security group to it for access control. Security groups act as a basic cloud firewall in AWS. This configuration allows:

- The entire internet to connect to our instance on TCP port 80 (HTTP) and 443 (HTTPS)

- Admin access only to OpenLiteSpeed’s admin web console (TCP port 7080)

- SSH access (TCP port 22) restricted to admin IPs only

- Unrestricted outbound traffic from the instance to anywhere

If you don’t have a static IP to specify in var.admin_ips, you could temporarily use ["0.0.0.0/0"] as the cidr_blocks to allow worldwide access to your admin console and SSH – but this is STRONGLY not recommended for security reasons! A better approach would be to use a secure VPN with a static IP, allowing you to safely SSH into the instance and configure OpenLiteSpeed.
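One way to make that mistake harder is to let Terraform reject bad values before anything is deployed. The sketch below is an optional variation of the admin_ips variable, meant to replace the earlier declaration rather than sit next to it; the validation rules are my own suggestion and not part of the original code.

```hcl
variable "admin_ips" {
  description = "CIDR blocks allowed to reach SSH and the OpenLiteSpeed console"
  type        = list(string)

  validation {
    # Every entry must parse as a CIDR block, e.g. "203.0.113.10/32".
    condition     = alltrue([for ip in var.admin_ips : can(cidrhost(ip, 0))])
    error_message = "Each admin_ips entry must be a valid CIDR block such as 203.0.113.10/32."
  }

  validation {
    # Refuse the catch-all range so admin ports are never opened to the
    # whole internet by accident.
    condition     = !contains(var.admin_ips, "0.0.0.0/0")
    error_message = "The range 0.0.0.0/0 is not allowed in admin_ips; list specific admin addresses instead."
  }
}
```

With these rules in place, terraform plan fails early with a readable message instead of quietly opening ports 22 and 7080 to everyone.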

Now we can finally define our web server EC2 instance:

resource "aws_key_pair" "websites_key_pair" {

key_name = "WebsitesKeyPair"

public_key = var.admin_public_key

tags = {

Name = "WebsitesInstanceKeyPair"

CostCenter = "Bugfloyd/Websites/Instance"

}

}

resource "aws_instance" "webserver" {

ami = var.ols_image_id

instance_type = "t3.small"

key_name = aws_key_pair.websites_key_pair.key_name

network_interface {

network_interface_id = aws_network_interface.webserver.id

device_index = 0

}

root_block_device {

volume_size = 20

}

tags = {

Name = "WebserverInstance"

CostCenter = "Bugfloyd/Websites/Instance"

}

}

resource "aws_route53_record" "main_dns_record" {

for_each = var.domains

zone_id = each.value

name = each.key

type = "A"

ttl = 300

records = [aws_instance.webserver.public_ip]

}

resource "aws_route53_record" "www_dns_record" {

for_each = var.domains

zone_id = each.value

name = "www.${each.key}"

type = "A"

ttl = 300

records = [aws_instance.webserver.public_ip]

}

output "webserver_instance_ip" {

description = "The public IP address of the webserver EC2 instance"

value = aws_instance.webserver.public_ip

}

First, we create an EC2 key pair and pass our public key to it via the variables. Then the main EC2 instance is defined.

Here we’re also adding two DNS record resources to connect our domain names to our EC2 instance:

- The first resource (main_dns_record) creates A records for the root domains (e.g., example.com)

- The second resource (www_dns_record) creates A records for the www subdomains (e.g., www.example.com)

Both records point to the public IP address of our webserver instance, so visitors can access our WordPress sites whether they include “www” or not.

The for_each = var.domains loop allows us to create these records for multiple domains simultaneously. Since var.domains is a map of domain names to their Route 53 hosted zone IDs, this code will iterate through each domain in the map and create corresponding DNS records for both the root domain and www subdomain.
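To make the shape of that map concrete, here is a sketch of what the value for var.domains could look like in a terraform.tfvars file. The first entry reuses the zone ID from the hosted zones output earlier in this post; the second domain and its ID are made-up placeholders.

```hcl
# terraform.tfvars (sketch): domain name => Route 53 hosted zone ID
domains = {
  "bugfloyd.com" = "Z09609554Y3NG7CAHTMB" # ID printed by the hosted zones output
  "example.org"  = "Z0000000000EXAMPLE"   # made-up placeholder for a second site
}
```

Inside the record resources, each.key is the domain name and each.value is the zone ID, so adding a site’s DNS records is a matter of adding one line to this map.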

We can define some outputs for our resources to immediately get the important details after deployment right in our terminal. For now, I’ve included a single output to print the IP address of the deployed Web Server instance.
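If you find yourself looking up other details after each deployment, more outputs can be added in the same way. The two below are only suggestions with names of my own choosing; they rely on the instance and record resources defined above.

```hcl
output "webserver_instance_id" {
  description = "The ID of the webserver EC2 instance"
  value       = aws_instance.webserver.id
}

output "website_dns_records" {
  description = "Fully qualified names of the root-domain A records, keyed by domain"
  value = {
    for domain, record in aws_route53_record.main_dns_record :
    domain => record.fqdn
  }
}
```

You can print them again at any time with terraform output, without re-running a plan.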

Choosing the Right AMI for the Web Server

Each EC2 instance needs an Amazon Machine Image (AMI) to boot and function. Think of the AMI as the operating system with pre-installed software and packages. It can be an AWS-managed image like Amazon Linux, or a specific distribution like Ubuntu.

For our web server, we have a few options:

- Use bare-minimum ready AMIs: Start with a basic AMI like Amazon Linux or Ubuntu, then SSH into the instance after deployment to install and configure OpenLiteSpeed, MySQL, phpMyAdmin, and WordPress. This option isn’t very scalable or maintainable and is difficult to automate.

- Build and store our own custom AMI: This is the recommended approach for scalable and manageable deployments. Set up an instance once with all your required configurations, then create an AMI from it to use for future deployments. I covered this topic in detail in my post: The Ultimate AWS AMI for WordPress Servers: Automating OpenLiteSpeed & MariaDB Deployment with Packer and Ansible.

- Use a pre-built AMI: There are many ready-to-use AMIs in the AWS Marketplace. You subscribe to these AMIs and typically pay an hourly or monthly fee, although some offer free trials.

If you want to build your own free custom AMI, follow the mentioned post and create the AMI in the same region where you are deploying the WordPress resources. At the end, note the AMI ID, which is a string like ami-08f1f8c9eeed82ca6, and skip to the next section.
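As an alternative to copying the ID by hand, Terraform can look up your newest custom AMI with a data source. This is just a sketch: the name pattern is a hypothetical naming scheme, so adjust the filter to whatever name your own AMI build produces.

```hcl
# Look up the newest AMI owned by this account whose name matches a pattern.
data "aws_ami" "ols_custom" {
  most_recent = true
  owners      = ["self"]

  filter {
    name   = "name"
    values = ["ols-wordpress-*"] # hypothetical naming scheme; use your own
  }
}

output "custom_ols_ami_id" {
  value = data.aws_ami.ols_custom.id
}
```

You could then use data.aws_ami.ols_custom.id in place of var.ols_image_id. Keep in mind that the lookup runs in the provider’s region, and that a newer AMI appearing later would make Terraform plan a replacement of the instance.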

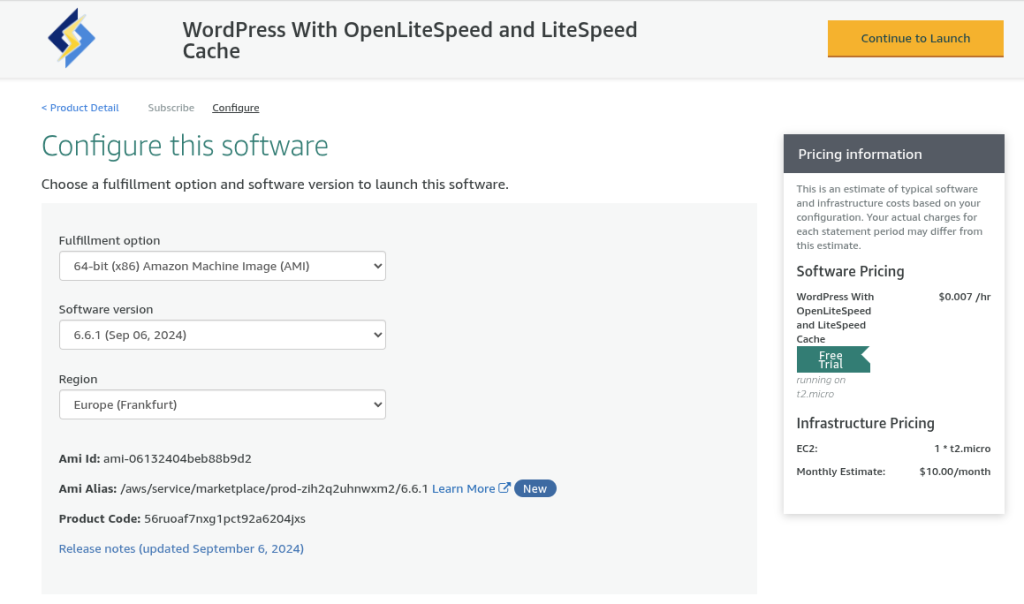

If you decide to continue with option 3, for simplicity in this post, we use an AMI from AWS Marketplace officially distributed by LiteSpeed Technologies Inc. This AMI is based on Ubuntu 24.04 and is called WordPress With OpenLiteSpeed and LiteSpeed Cache. It includes these components pre-installed and configured:

- OpenLiteSpeed

- MariaDB

- phpMyAdmin

- LiteSpeed Cache

- memcached

- redis

- Certbot

- Postfix

- WordPress

The subscription costs $0.007 per hour (about $5 per month). To use it, open its page on AWS Marketplace, accept the terms, and subscribe to the product. Then click “Continue to Configuration.” When prompted, DO NOT launch an instance directly. Instead, note the AMI ID provided for the latest version in your chosen region. We’ll use this ID when deploying our resources.

In my case, the ID I need is ami-06132404beb88b9d2, but this changes and may be different for you, so make sure to use the ID you get from the AWS Marketplace.

After subscribing, you have a 7-day trial period. You can manage and cancel active subscriptions via the Marketplace: Manage Subscriptions page in the AWS console.

I personally use a custom AMI for my websites, which is more flexible and free! You can follow this post using the marketplace AMI, and later decide whether to keep using it or switch to another option. Or, check out my other post on building your own free custom AMI, then come back here and use that instead!

Choosing the Right EC2 Instance Size for WordPress

I’m using a t3.small instance type, but you can choose another based on your needs. I recommend sticking with T3 (Intel-based) or T3a (AMD-based) types as they offer a good balance of price and performance. Check out the AWS instance type naming conventions for more details. You can find the available sizes for T3 and T3a families on the EC2 Instance Types page.

Avoid nano-sized instances as they have insufficient memory for a web server. If you opt for micro instances, be aware that you might encounter memory issues that could cause crashes and reboots. I’ve found the small size to be the most reliable and cost-efficient option for most use cases.

Also, if you feel the need to use one of the bigger sizes like 2xlarge, you might need to reconsider your system design and architecture. At that point, a distributed architecture with separate database servers might be more cost-effective and reliable.

Here’s a quick reference for recommended instance types:

| Name | vCPUs | Memory (GiB) |
| --- | --- | --- |
| t3.small, t3a.small | 2 | 2.0 |
| t3.medium, t3a.medium | 2 | 4.0 |
| t3.large, t3a.large | 2 | 8.0 |
| t3.xlarge, t3a.xlarge | 4 | 16.0 |

Even small-sized instances can handle hosting 5-10 WordPress websites with moderate traffic. We’ll also use the LiteSpeed Cache plugin, which stores cached versions of pages and assets on the web server and serves them directly instead of having WordPress generate a dynamic response for every request. This further reduces the load on the instance.

However, if you’re running resource-intensive applications like WooCommerce stores with many active buyers, you’ll need to handle dynamic requests efficiently, and a small instance might not be sufficient. My advice: start with a small instance, monitor resource usage, and scale up if you see consistent high utilization.
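To make “monitor resource usage” a bit more concrete, here’s a minimal sketch you could run on the instance to check memory pressure. It reads the MemTotal and MemAvailable fields from /proc/meminfo (Linux only). The function names and the 85% default threshold are my own illustrative choices, not a recommendation from AWS or LiteSpeed:

```shell
# Print the percentage of memory in use, based on a meminfo-style file.
# Defaults to /proc/meminfo; the optional argument exists mainly so the
# function can be tried against a sample file.
mem_used_percent() {
  awk '/^MemTotal:/     { total = $2 }
       /^MemAvailable:/ { avail = $2 }
       END { if (total > 0) printf "%d\n", (total - avail) * 100 / total }' \
    "${1:-/proc/meminfo}"
}

# Report usage and return non-zero when it reaches the threshold.
# Usage: check_memory [threshold_percent] [meminfo_file]
check_memory() {
  threshold="${1:-85}"   # arbitrary example value
  used="$(mem_used_percent "$2")"
  if [ "$used" -ge "$threshold" ]; then
    echo "WARNING: memory usage at ${used}% (threshold ${threshold}%)"
    return 1
  fi
  echo "OK: memory usage at ${used}%"
}
```

If a check like this keeps reporting warnings over several days, that’s a reasonable signal to move up one instance size.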

Remember that disk size isn’t coupled with instance size: you can add disk space to any instance type. In our setup, I’ve specified a 20 GiB volume for the root partition, which is sufficient for most small WordPress deployments.

You can check AWS pricing for on-demand EC2 instances for the most current cost information.

Deployment

We made it! Now it’s time to deploy and test our setup. We’ll follow the same steps we used when deploying the hosted zones.

Initialize Terraform

First, create a backend configuration file named backend_config.hcl to store the remote backend information, using the same region and bucket name that we used in the “Terraform Backend” section:

region = "eu-central-1"

bucket = "bugfloyd-websites-tf"

Note: This file should not be committed to git! Add it to your .gitignore file.

Now we can initialize the Terraform backend (state) by running this command in the infra directory:

terraform init -backend-config backend_config.hcl

Deployment Configuration

As before, we’ll define a tfvars file named terraform.tfvars and put our values there. For example:

region = "eu-central-1"

ols_image_id = "ami-06132404beb88b9d2" # Your AMI ID

admin_ips = ["X.X.X.X/32", "Y.Y.Y.Y/32"]

admin_public_key = "ssh-rsa AAA...32U= bugfloyd@laptop"

domains = {

"bugfloyd.com" = "<HOSTED ZONE ID FROM EARLIER DEPLOYMENT>"

}

A few important notes about these variables:

- For ols_image_id, use the ID you got earlier from AWS Marketplace or the ID of your own custom AMI.

- Make sure to use the same region that the AMI belongs to.

- It’s recommended to pass the admin_ips in single-host CIDR notation (adding /32 at the end of each IP).

- For admin_public_key, use your public key value. It’s normally stored in a location like ~/.ssh/id_rsa.pub. If you don’t have one, create one using the ssh-keygen command.
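If you’re not sure what to put in admin_ips, the helper below sketches the single-host CIDR formatting mentioned above. The function name is hypothetical, and the format check is deliberately loose (it doesn’t verify that each octet is at most 255):

```shell
# Turn a bare IPv4 address into single-host CIDR notation (A.B.C.D/32).
# Only the overall shape is checked, not the numeric range of each octet.
to_host_cidr() {
  if printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
    printf '%s/32\n' "$1"
  else
    echo "not an IPv4 address: $1" >&2
    return 1
  fi
}

# Example: look up your current public IP (checkip.amazonaws.com is a
# service run by AWS) and format it for admin_ips:
#   to_host_cidr "$(curl -s https://checkip.amazonaws.com)"
```

Keep in mind that many home and office connections have dynamic IPs, so you may need to update admin_ips and redeploy when yours changes.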

Note: This file should not be committed to git! Add it to your .gitignore file.
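Both backend_config.hcl and terraform.tfvars need to stay out of git. One way to add them to .gitignore without creating duplicate entries is sketched below. The function name is my own, and it assumes you run it from the directory that contains the .gitignore file:

```shell
# Append a pattern to .gitignore in the current directory,
# unless that exact line is already present.
ignore_in_git() {
  grep -qxF -- "$1" .gitignore 2>/dev/null || printf '%s\n' "$1" >> .gitignore
}

# Usage:
#   ignore_in_git backend_config.hcl
#   ignore_in_git terraform.tfvars
```

Running it twice with the same file name is safe, since the second call finds the existing line and does nothing.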

Deploying the Resources

To deploy the resources to AWS:

terraform plan -out main.tfplan # Review the changeset

terraform apply main.tfplan

After a successful deployment, you’ll see the output including the public IP address of your web server instance:

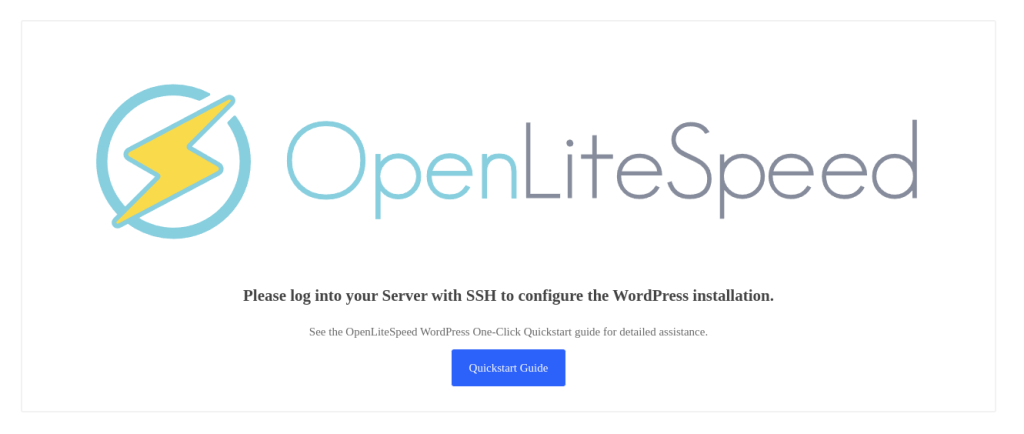

webserver_instance_ip = "XX.XX.XX.XX"

Yaayy! It’s deployed! If the deployment was successful, you should be able to see OpenLiteSpeed’s welcome page by visiting your domain or the instance IP in a browser.

Now let’s configure it!

Web Server & WordPress Configuration

Note: In this section, I’m assuming you’re using the OLS AMI from the third option in the “Choosing the Right AMI for the Web Server” section. If you’ve built a custom AMI following the second option and used it for the current deployment, you should instead check out “Verify the Deployment” and the sections after it in that post.

Now we can configure the web server to properly serve our WordPress websites.

For reference, always check the official LiteSpeed documentation about this image and its configuration. This documentation will remain useful even if you switch to a custom solution but continue using OpenLiteSpeed.

I won’t repeat the entire documentation here, but make sure to complete these main steps:

SSH into the instance:

ssh ubuntu@<INSTANCE_IP>

Run the initial configuration:

On your first SSH session, the image automatically runs a configuration script that asks you several questions to set up your first WordPress website:

- Your domain: Enter the domain name without protocol and www subdomain (e.g., bugfloyd.com)

- Please verify it is correct: y

- Do you wish to issue a Let’s encrypt certificate for this domain? y

- Please enter your E-mail: your@email.address

- Please verify it is correct: y (Then wait for the SSL certificate request)

- Do you wish to force HTTPS rewrite rule for this domain? y

- Do you wish to update the system now? y (Then wait for the update to complete)

Access important passwords:

Database passwords are stored in a file named .db_password under the ubuntu user’s home directory. OpenLiteSpeed’s admin password is stored in a file named .litespeed_password in the same location. Retrieve these passwords and then delete these files for security:

cat ~/.db_password

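cat ~/.litespeed_password

# After saving both passwords somewhere safe, delete the files:

rm ~/.db_password ~/.litespeed_password

Configure the firewall: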

By default, Ubuntu’s firewall (ufw) is enabled without an allow rule for the OLS admin console port. Generally, it’s best practice to enable this port only when needed and disable it afterward. However, since we’ve already restricted access to this port via AWS security groups (limiting it to our own IP address), we can keep the port open on the instance firewall:

sudo ufw allow 7080

If you prefer an extra layer of security, you can instead allow access only from your specific IP address at the instance level as well:

sudo ufw allow from <YOUR_IP> to any port 7080

Reload ufw:

sudo ufw reload

Now you can access the OLS admin console by visiting https://<INSTANCE_IP>:7080.

To upload new files, use SFTP as explained in the LiteSpeed documentation, and don’t forget to update file owners and permissions after uploading.

The LiteSpeed documentation contains tons of useful information about accessing and securing phpMyAdmin, migrating existing websites, troubleshooting issues, and much more. One particularly helpful feature is the automated script they provide for adding new virtual hosts (websites) to your server. This means that to add a new website to your setup, you can:

- Add the domain to the hosted zones infrastructure

- Deploy it and get the hosted zone ID

- Add the domain and hosted zone ID to the main infrastructure variables

- Deploy the updated infrastructure

- Run the LiteSpeed script to configure the new virtual host
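The steps above can be sketched as a small script. Treat it as an outline under assumptions rather than a finished tool: the directory names hosted-zones and infra are placeholders for wherever your two Terraform projects live, both projects are assumed to be initialized already, and the helper names are mine. By default it only prints the Terraform commands (DRY_RUN=1), so you can review them before running anything for real:

```shell
# Run a command, or only print it when DRY_RUN is 1 (the default here).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Plan and apply inside the given project directory.
# Terraform's global -chdir option switches to that directory first,
# so main.tfplan is written to and read from the project itself.
deploy_dir() {
  run terraform -chdir="$1" plan -out main.tfplan
  run terraform -chdir="$1" apply main.tfplan
}

add_website() {
  echo "1) Add the new domain to the hosted zones variables, then deploy:"
  deploy_dir "${HOSTED_ZONES_DIR:-hosted-zones}"   # assumed directory name
  echo "2) Copy the new hosted zone ID into the domains map in terraform.tfvars, then deploy:"
  deploy_dir "${INFRA_DIR:-infra}"                 # assumed directory name
  echo "3) SSH into the instance and run the LiteSpeed virtual host script (see their docs)."
}

# Usage:
#   add_website              # dry run, prints the commands
#   DRY_RUN=0 add_website    # actually runs terraform
```

In practice you’d pause between the steps to edit the variable files, so running the two deploy_dir calls separately is often more convenient than calling add_website in one go.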

Also, be aware that this image includes several helpful management scripts under /usr/local/lsws/admin/misc. One notable example is a script that lets you reset your admin password if you forget it:

/usr/local/lsws/admin/misc/admpass.sh

Install WordPress

Once you’ve completed the initial setup and configured OpenLiteSpeed, it’s time to check out your new WordPress site!

Now if you head to your website domain, you should see the lovely WordPress installation wizard! The best part is that you don’t even need to enter database connection details, as those are already configured in your wp-config.php file by the initializer script.

Just follow the standard WordPress setup steps to:

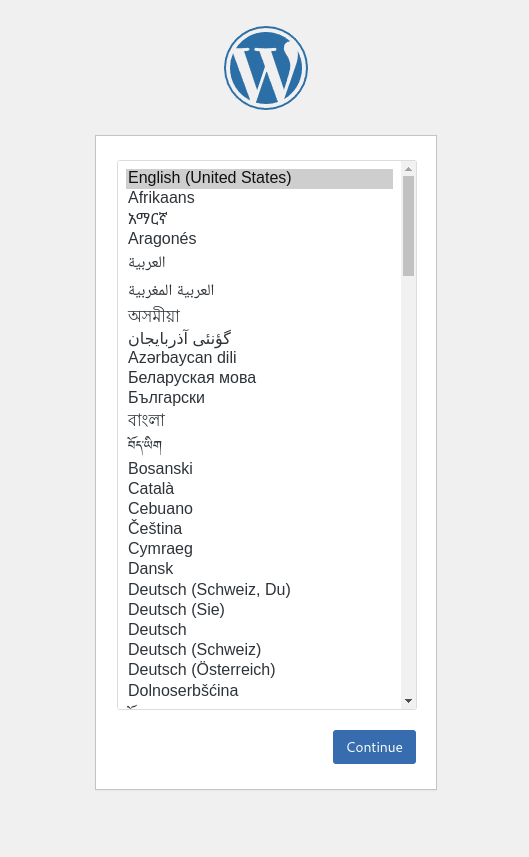

- Choose your site language

- Create your admin account

- Set your site title

- Complete the installation

Backup Solution

Having a proper backup solution is a critical aspect of any application, web server, and deployment. This is especially important in our case since, for simplicity, we decided to host WordPress files and database data on the instance’s local storage. This approach is inherently risky because EC2 instances are relatively fragile, and you shouldn’t rely on their local storage to persist critical data like databases.

To safely continue using this simple approach, I HIGHLY RECOMMEND adding a proper backup solution for your setup. You have two main options:

- Use a WordPress backup plugin like UpdraftPlus, WPvivid, or BackWPup

- Implement a server-level backup solution

For many reasons, I prefer the second option. I’ve explained these reasons and built a custom backup solution for OpenLiteSpeed and MariaDB servers in my post: Build a Robust S3-Powered Backup Solution for WordPress Hosted on OpenLiteSpeed Using Bash Scripts.

If you’ve followed the custom AMI solution while choosing your AMI, you already have this backup solution in place! You can start using it by following the “How to Use the Backup System” section in that post.

However, if you decided to use the OLS AMI from AWS Marketplace, you can add the backup scripts that I developed by following the “Deployment” section of its GitHub repository. I suggest using the Ansible Deployment option for the smoothest experience.
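To give a feel for what a server-level backup does at its core, here’s a minimal sketch of the file-archiving part. This is not the backup solution from the linked post, just an illustration: the function name, the example paths, and the bucket name are all placeholders, and a real backup also needs a database dump, retention rules, and restore tests.

```shell
# Create a dated .tar.gz archive of a site directory and print its path.
# Usage: archive_site <site_dir> <output_dir>
archive_site() {
  src="$1"
  out_dir="$2"
  name="$(basename "$src")-$(date +%Y%m%d-%H%M%S).tar.gz"
  mkdir -p "$out_dir"
  # -C switches to the parent directory so the archive holds relative paths
  tar -czf "$out_dir/$name" -C "$(dirname "$src")" "$(basename "$src")"
  echo "$out_dir/$name"
}

# Example (placeholder paths and bucket, adjust to your server):
#   archive="$(archive_site /var/www/html /tmp/backups)"
#   aws s3 cp "$archive" "s3://my-backup-bucket/files/"
```

Copying the archive off the instance (for example to S3, as in the commented usage) is the important part, since a backup stored only on the same EC2 volume disappears together with the instance.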

Remember: In cloud deployments, always assume that your instance could disappear at any moment. Having reliable, tested backups is not optional—it’s essential for peace of mind and business continuity!

Conclusion

Congratulations! You’ve successfully deployed a minimal yet powerful WordPress hosting solution on AWS using Terraform and OpenLiteSpeed. This setup strikes an excellent balance between cost-effectiveness, performance, and ease of management—perfect for small businesses, personal blogs, or freelancers managing multiple sites.

Let’s recap what we’ve accomplished:

- Created a secure and isolated network environment using AWS VPC

- Deployed a high-performance OpenLiteSpeed web server on EC2

- Configured DNS management with Route 53

- Set up WordPress with database access

- Managed everything through Infrastructure as Code with Terraform

The beauty of this solution is its flexibility. You can start small with a single WordPress site and easily expand to host multiple sites on the same infrastructure. And when your sites grow in popularity or complexity, you can either scale up the instance size or graduate to the more robust architectures we’ll cover in future posts.

Phew! I know this was a long post (and sadly, there’s no potato at the end—sorry about that!), but I hope you found it worthwhile. Building infrastructure properly takes time, but the foundation we’ve laid here will save you countless hours down the road.

Remember that while this setup is minimal, it doesn’t compromise on the essentials. You’re getting enterprise-grade performance from OpenLiteSpeed, the reliability of AWS infrastructure, and the convenience of Terraform automation—all for about $25 per month.

A few important takeaways:

- Always maintain backups – Whether you use the S3 backup solution I mentioned or a WordPress plugin, make this your top priority

- Monitor your resources – Keep an eye on CPU, memory, and disk usage to know when it’s time to scale

- Keep security in mind – Regularly update WordPress, plugins, and the server itself

- Use proper credentials management – Don’t leave sensitive information in plain text files

I hope this guide has shown you that cloud hosting doesn’t have to be complicated or expensive. With the right tools and approach, even beginners can create professional, reliable WordPress hosting environments on AWS.

Happy hosting, and see you in the next tutorial where we’ll explore more advanced AWS architectures for scaling WordPress!
